Structured and Unstructured Storage

Purpose

This page covers notes, labs, personal setup items, deviations, etc. covered in the Design a data storage solution for non-relational data Learn module as well as the relational data design.

-

Design a data storage solution for non-relational data

- Recommend a data storage solution to balance features, performance, and cost

- Design a data solution for protection and durability

- Recommend access control solutions for data storage

Non-Relational Data

- Semi-structured Data: Structured (has standards) but flexible. e.g. HTML, JSON, XML

- Unstructured: Blobs

Azure Storage Accounts

- Blob storage for large volumes of images and multimedia files

- Azure Files for user level access/distribution. e.g. SMB, NFS, REST

- Queue Storage for large number of messages to enable other workers/systems to process the data

Data Redundancy

- Consider locations (data ingress/egress) and compliance

- Regional items are typically governed by geographical region. E.g. If you have

West US,Central USandEastern Earope, there are two (US and Europe) compliance locations- Paired regions should take these boundaries into account, but if there are tighter ones then watch out for West vs East regional restrictions

- Data Isolation

- Regional items are typically governed by geographical region. E.g. If you have

- Data retention

- Possible need to offload logs to Azure Monitor Logs for retention and archiving.

- Resilience

- LRS, ZRS, GRS, etc. storage accounts along with robust backup policies

- Replication is NOT backup

- Tier the storage accounts according to compliance and replication

- LRS, ZRS, GRS, etc. storage accounts along with robust backup policies

- Administrative overhead

- Document- document - document

- Create and enforce policies

Blob Storage

- Determine the access tier Hot, Cool, Cold, Archive

- Keep cost in mind and automate tier movement via lifecycle management

- Hot: default tier, cost optimized for access - cost-prohibitive for long term storage (+15 days)

- Cool: cost optimized for infrequent access - think short term backups and DR data. The ~30 day stuff.

- Cold: cost optimized for very infrequent access - 90 day items

- Archive: optimized for very long term storage and takes hours to rehydrate for access.

- Data must live here for 180+ days or it’s subject to early deletion costs

- Keep cost in mind and automate tier movement via lifecycle management

- Do you need immutability

- Two management mechanisms, time based retention and legal hold

- Set policy for a specific time interval. Objects CAN NOT be modified or deleted prior to policy expiration

- Supported in Hot, Cool and Archive via time-retention policies

- Legal hold applies immutability until the hold is explicitly removed

- Objects can be created and read but not modified or deleted

- Premium Blob Storage uses legal hosts to support immutable

- Two management mechanisms, time based retention and legal hold

Blob Best Practice Considerations

- Disable anonymous read access to containers and blob

- apply ARM lock to prevent loss

- Disable traffic to the public endpoint

- Create private endpoints

- Firewall/allow traffic appropriately

- Authorization recommendations

- RBAC

- Don’t use an account key

- If you muse, use key vault

- Disallow shared key

- Blocks Service and Account SaS tokens

- User delegated SaS is permitted. Microsoft recommends using a user delegation SAS when possible for superior security.

- Tighten up TLS versions

Design for Azure Files

- Easy access for User/OS systems via SMB or NFS (linux only)

- restrictions apply, see your documentation for details

- Program/web/service access via API

- Designed to replace NAS for files servers

- Globally redundant

- Transmitted via HTTPS

Files Design Considerations

- Azure file shares or Azure File Sync

- File Sync for if there are server systems (typically on-prem but not necessarily) that need to be included/available

- File Shares for direct access to SMB shares in the cloud

- Need access outbound on port 445 (some ISPs may restrict this)

- Performance

- There are a slew of considerations, basically, if latency access via adjacent systems/services (VDI) is a consideration then go with premium. If not, consult the documentation and perform tests

- Probably a good idea to also do segmentation to separate access tiers and optimize costs (watch out for admin overhead)

- Files Documentation > Performance, scale and cost > scalability and performance targets

- There are a slew of considerations, basically, if latency access via adjacent systems/services (VDI) is a consideration then go with premium. If not, consult the documentation and perform tests

- Tiers

- Premium - SSD for database and web. Accessed via SMB and NFS

- Transaction optimized - HDD standard storage hardware

- Hot access tier - HDD standard storage hardware. General purpose file shares like Teams

- Cool access tier - cost efficient archive scenarios

- Azure NetApp Files

- Your high performant NAS in the cloud

- Also accessible via SMB and NFS

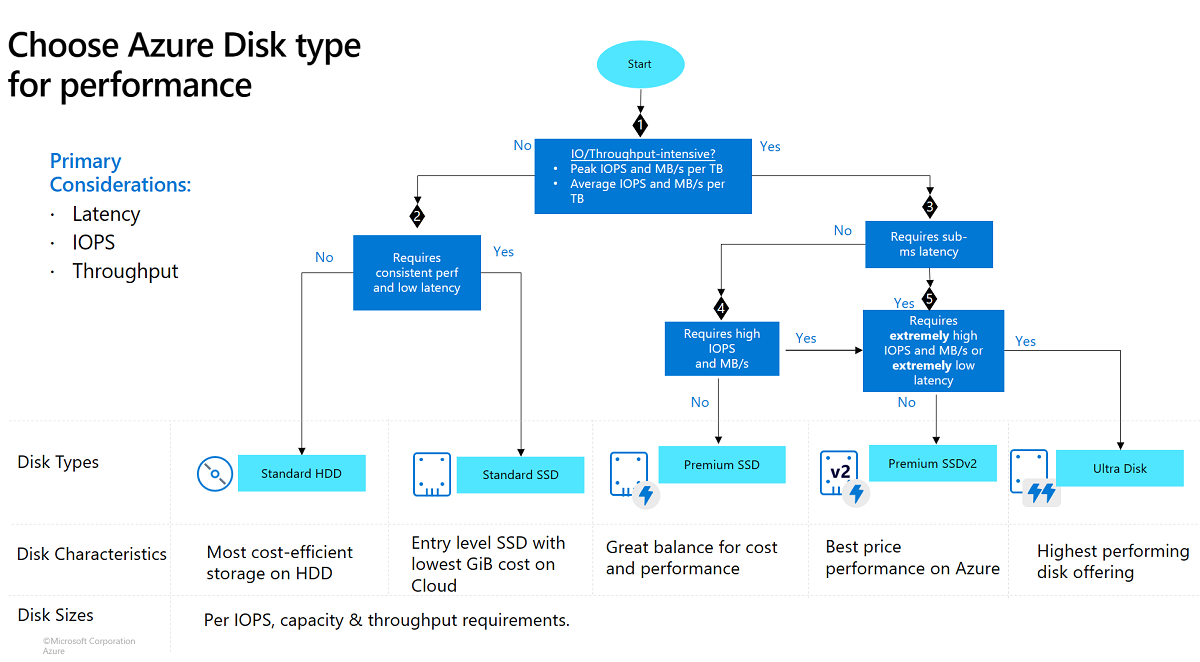

Design for Azure managed disks

Different types of disks

| Ultra disk | Premium SSD v2 | Premium SSD | Standard SSD | Standard HDD | |

|---|---|---|---|---|---|

| Disk type | SSD | SSD | SSD | SSD | HDD |

| Scenario | IO-intensive workloads such as SAP HANA, top tier databases (for example, SQL, Oracle), and other transaction-heavy workloads. | Production and performance-sensitive workloads that consistently require low latency and high IOPS and throughput | Production and performance sensitive workloads | Web servers, lightly used enterprise applications and dev/test | Backup, non-critical, infrequent access |

| Max disk size | 65,536 GiB | 65,536 GiB | 32,767 GiB | 32,767 GiB | 32,767 GiB |

| Max throughput | 10,000 MB/s | 1,200 MB/s | 900 MB/s | 750 MB/s | 500 MB/s |

| Max IOPS | 400,000 | 80,000 | 20,000 | 6,000 | 2,000, 3,000* |

| Usable as OS Disk? | No | No | Yes | Yes | Yes |

Different types of encryption

- Azure Disk Encryption: encrypts the VHD and is only accessible by the VM that owns the disk

- Basically it’s Bitlocker

- Server-Side Encryption: Done at the physical disk in the DC. Encryption at rest prevents pulling the drive and reading the contents (file shares, vhd files, etc.)

- Encryption at host: an extension to the server-side encryption. All temp and cache disks are encrypted at rest and in transit as well

Design for storage security

- Pay attention to the security baseline options and try to follow them at a minimum.

- They can be improved on and ignored as needed

- Shared Access Signatures are pretty slick, just don’t forget to govern them by access policies. Otherwise they are immutable.

- Service endpoints, firewall access, VNET access, etc. are all covered in AZ-104

- Gain encryption control with customer-managed keys

Relational Data

-

Structured Data: Data that has a defined scheme with tables, rows, columns, keys, etc. e.g. relational databases

-

Design a data storage solution for relational data

- Recommend database service tier sizing

- Recommend a solution for database scalability

- Recommend a solution for encrypting data at rest, data in transmission, and data in use

| Compare | SQL Database | SQL Managed Instance | SQL Server on Azure Virtual Machines |

|---|---|---|---|

| Scenarios | Best for modern cloud applications, hyperscale or serverless configurations | Best for most lift-and-shift migrations to the cloud, instance-scoped features | Best for fast migrations, and applications that require OS-level access |

| Features | Single database- Hyperscale storage (for databases up to 100 TB)- Serverless compute- Fully managed serviceElastic pool- Resource sharing between multiple databases for price optimization- Simplified performance management for multiple databases- Fully managed service | Single instance- SQL Server surface area (vast majority)- Native virtual networks- Fully managed serviceInstance pool- Pre-provision compute resources for migration- Cost-efficient migration- Host smaller instances (2vCore)- Fully managed service | Azure Virtual Machines- SQL Server access- OS-level server access- Expansive version support for SQL Server- Expansive OS version support- File stream, Microsoft Distributed Transaction Coordinator (DTC), and Simple Recovery model- SQL Server Integration Services (SSIS), SQL Server Reporting Services (SSRS), and SQL Server Analysis Services (SSAS) |

Azure SQL Database

PaaS solution that removes the OS and Server instance layers from the customer.

- Scalable and serverless

- SQL Database can autoscale and the only offering that supports large databases up to 100 TB

- Elastic pools cut costs by sharing compute and storage resources across databases

- Supports Active geo-replication

Two pricing options for SQL Database

- DTU model (Database Transaction Unit)

- Pre-configured bundle of compute + storage + IO

- scaled in specific steps

- inflexible but simple

- vCore model

- Independent compute, storage and IO scaling

- complex but flexible

- Microsoft recommended

- Three tiers or pricing structures

- General Purpose

- Business Critical

- Hyperscale

Active Geo-replication is a reliability feature for Azure SQL database. It’s a pretty standard HA setup where the details are specifc to the Azure SQL product.

- Load balancer in front

- SQL Primary replicates to Secondary

- Application read/write to Primary SQL

- Application read-only to Secondary

- Failover event triggers traffic to route to secondary region

- Application seamlessly switches

- SQL switches from read-only to read/write.

All data is replicated as read-only from the primary region to the secondary. The application can be configured to read-write to Primary, and read to Secondary. When an event triggers fail-over, the application seamlessly switches to Secondary and secondary instance changes to read-write.

Azure SQL Database Scalability

- Supports dynamic scalability

- Keep in mind at pricing model will introduce downtime (DTU)

- Scale up or out

- Scale up (vertical scaling) is achieved via elastic pools. The compute size for the set of databases is increased/decreased

- Ideal for unpredictable and variable usage

- Scale out (horizontal) has two techniques to scale

- Sharding: split up your DB into smaller components and distribute them across multiple sets

- Read scale-out: offload read-only workloads and use the read-only replica compute to process data.

- Scale up (vertical scaling) is achieved via elastic pools. The compute size for the set of databases is increased/decreased

vCore Model

Comparison table between the vCore service tiers (much more comprehensive here)

| Use Case | General Purpose | Business Critical | Hyperscale |

|---|---|---|---|

| Best for | most workload with scalable compute and storage options | high availability secondary replicas with highest I/O performance | wide variety of workloads scalable storage with more than one high availability secondary replica |

| Storage Size | 1GB-4TB | 1GB-4TB` | 10GB - 100TB |

| Availability | one replica, no read-scale, zone-redundant | Three replicas, one read-scale, zone redundant | zone-redundant |

- Independent compute, storage and IO scaling

- complex but flexible

- Microsoft recommended

- Three tiers or pricing structures

- General Purpose

- The General Purpose tier uses remote storage. The primary replica uses locally attached SSD for the temporary database, tempdb.

- The data and log files are stored in Azure Premium Storage, which is locally redundant storage. Multiple copies are stored in one zone of a region.

- The backup files are stored in Azure Standard Storage, which is RA-GRS by default. It’s globally redundant storage with copies in multiple regions.

- Business Critical

- The highest performance and availability of all Azure SQL service tiers.

- Database availability in the Business Critical tier is like deploying an Always On availability group behind the scenes

- Data and log files all run on direct-attached SSD, which significantly reduces network latency.

- There are three secondary replicas. One secondary replica can be used as a read-only endpoint (at no extra charge).

- Hyperscale

- Azure SQL Database only

- Unique architecture of caches and page servers allows for accessing data from cache instead of the data file on disk.

- The Hyperscale architecture supports databases as large as 100 TB.

- Uses snapshots for nearly instantaneous backups

- Restores take minutes

- General Purpose

DTU Model

- Switching tiers will introduce downtime

- Single databases and elastic pools are billed hourly based on tier/compute size

- Good for a specific and predictable level of performance

eDTUs mitigate some of the rigidity of the regular DTU. Define a boundary for the elastic pool and add/remove eDTUs as needed.

| Basic | Standard | Premium | |

|---|---|---|---|

| Target workload | Development and production | Development and production | Development and production |

| Uptime SLA | 99.99% | 99.99% | 99.99% |

| Backup | A choice of geo-redundant, zone-redundant, or locally redundant backup storage, 1-7 day retention (default 7 days) long term retention available up to 10 years | A choice of geo-redundant, zone-redundant, or locally redundant backup storage, 1-35 day retention (default 7 days) long term retention available up to 10 years | A choice of locally-redundant (LRS), zone-redundant (ZRS), or geo-redundant (GRS) storage 1-35 days (7 days by default) retention, with up to 10 years of long-term retention available |

| CPU | Low | Low, Medium, High | Medium, High |

| IOPS (approximate)* | 1-4 IOPS per DTU | 1-4 IOPS per DTU | >25 IOPS per DTU |

| IO latency (approximate) | 5 ms (read), 10 ms (write) | 5 ms (read), 10 ms (write) | 2 ms (read/write) |

| Columnstore indexing | N/A | Standard S3 and higher | Supported |

| In-memory OLTP | N/A | N/A | Supported |

Azure SQL Manged Instance

Another PaaS option for SQL Databases but it has deeper access into the management layer vs the Azure SQL Database offering.

- Ideal for lift-and-shift mibrations

- Uses the vCores model

- It compares to OS installed SQL Server, not Azure SQL Database

- Provides the largest feature-set of traditional OS SQL Database installs

- Service Broker, CLR, SQL Server Agent, and Linked servers

Azure VM SQL Server

A great option for 1:1 migration via Azure Site Migration. It’s a typical SQL Server running on your OS of choice plus some value add features.

- Lean on Azure services like Blob storage, data lake, synapse analytics, etc.

- Write data directly into Data Lake storage for analytics and reporting

- Licensing options

- SQL management via the portal. e.g. enable/disable SQL features from portal.azure.com

- Automated Patching

- Defender for SQL

- Entra ID authentication

- Best practices assessment

Structured Data Encryption

| Data state | Encryption method | Encryption level |

|---|---|---|

| Data at rest | Transparent data encryption (TDE) | Always encrypted |

| Data in motion | Secure Socket Layers and Transport Layer Security (SSL/TLS) | Always encrypted |

| Data in process | Dynamic data masking | Specific data is unencrypted, Remaining data is encrypted |

- Transparent Data Encryption (TDE) is enabled by default

- Performes encryption in real-time for all data

- TDE performs encryption and decryption of the data at the page level

- Database backups are also encrypted because a backup operation copies the data pages from the database file to the backup device. No decryption is done during the backup operation

- You can use TDE with databases in an Always On Availability Group (AG)

- Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics enforce Secure Socket Layers and Transport Layer Security (SSL/TLS) encryption always for all connections.

- Data-in-use employs a policy-based security feature called dynamic data masking. This feature hides the sensitive data in the result set of a query over designated database fields, while the data in the database remains unchanged

- Dynamic data masking automatically discovers potentially sensitive data in Azure SQL Database and Azure SQL Managed Instance. The feature provides actionable recommendations to mask these fields

- The data masking policy can be configured in the Azure portal for Azure SQL Database

Azure SQL Edge

- Azure SQL Edge is an optimized relational database engine geared for IoT and IoT Edge deployments

- Azure SQL Edge is a containerized Linux application. The startup-memory footprint is less than 500 MM

- Same security features of SQL Server Enterprise

- Two deployment options

- Connected deployment: For connected deployment, Azure SQL Edge is available on the Azure Marketplace and can be deployed as a module for Azure IoT Edge.

- Disconnected deployment: Disconnected deployment is accomplished through Azure SQL Edge container images. The images can be pulled from docker hub and deployed either as a standalone docker container or on a Kubernetes cluster

- Azure SQL Edge supports solutions that work with, or without, network connectivity

- IoT Edge has a runtime running on the IoT device that allows you to develop a solution that stores locally when offline, then sends to Azure when online

Azure Cosmos DB

- If you currently use Azure Table Storage, you gain many benefits by moving to the Azure Cosmos DB Table API

- Azure Cosmos is just… bigger, badder and better vs Tables in every way